Intel talked a bit about the MIC programming model at Hot Chips, but the details show how easy it is. The short story is that you don’t have to do anything other than tell it where to run if your code is already thread aware.

The idea behind MIC is that the cards are organized like a rack of x86 servers talking over TCP/IP. If you are familiar with the MPI programming model, as anyone who works in HPC will be, a Phi card will look just like that. If you currently use Intel tools, the hardest part is finding the menu setting that controls the targeting. In fact, if you have an x86 program and do absolutely nothing, your code will run on a Phi out of the box; not very fast, but it will run. No other card out there can make a claim remotely close to this.

Since a Phi/Knights Corner/Larrabee card is 60 or so x86 cores on a ring, it looks to the software just like 60 four-threaded servers in a rack. It can talk TCP/IP over PCIe and runs Linux on one core, so there are no tricks to getting up and running. You just log in and run your code, or script whatever you want and off you go, exactly as you do now and have done many times in the past. If your code is already thread aware, and all HPC code is, then you just tell it where to run.

Optimization is another can of worms, but that is true for anything. Taking the TCP/IP overhead out of the loop is a good start, as is optimizing thread allocation, but the Intel tools already do this for you, so there isn’t much left to do. If you compiled with them, as many HPC code bases currently do, moving to Phi is likely just a simple recompile away. To the code, the difference between three threads and 200+ is almost zero, and Intel hasn’t made a server CPU with fewer than 8 threads for a long time now.
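To make the thread-count point concrete, here is a minimal Python sketch (not Intel's code or tooling) in which the work is split across however many hardware threads the OS reports via `os.cpu_count()`. The helper names `chunk_ranges`, `sum_of_squares`, and `parallel_sum_of_squares` are hypothetical. The same source runs unchanged with 3 workers or 240. CPython threads share one interpreter lock, so this shows the structure of thread-count-agnostic code rather than real speedup, which on a Phi would come from compiled, threaded C/C++ or Fortran.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def chunk_ranges(n_items, n_workers):
    """Split range(n_items) into n_workers contiguous, near-equal (lo, hi) chunks."""
    base, extra = divmod(n_items, n_workers)
    ranges, start = [], 0
    for w in range(n_workers):
        size = base + (1 if w < extra else 0)  # the first `extra` chunks get one more item
        ranges.append((start, start + size))
        start += size
    return ranges


def sum_of_squares(bounds):
    """The per-worker task: sum i*i over one chunk."""
    lo, hi = bounds
    return sum(i * i for i in range(lo, hi))


def parallel_sum_of_squares(n_items, n_workers=None):
    # Default to the machine's hardware thread count; nothing else depends on it.
    n_workers = n_workers or os.cpu_count() or 1
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        return sum(pool.map(sum_of_squares, chunk_ranges(n_items, n_workers)))
```

`parallel_sum_of_squares(1000, 3)` and `parallel_sum_of_squares(1000, 240)` both return 332833500; only the worker count differs.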

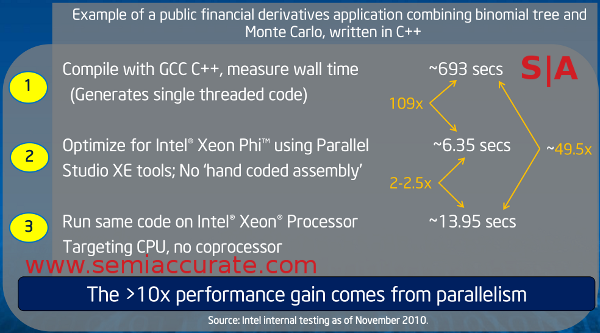

To demonstrate, Intel used a simple C++ loop that runs a common Monte Carlo algorithm. In single-threaded form, the code runs on a dual Xeon box in 693 seconds. If you add a single line of code to make it thread aware and recompile, it runs in 6.35 seconds on a Phi. This is the ‘best case’ scenario often seen in marketing materials, but no sane HPC code would be single threaded.
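The article does not show Intel's loop, so the following is only an illustrative stand-in: a Python Monte Carlo estimate of pi (not the algorithm Intel ran) in which the serial and threaded versions differ only in how the per-task calls are dispatched. In C or C++ the comparable change would typically be a single OpenMP `#pragma omp parallel for` line; that is an assumption about the kind of line Intel added, not a quote from the demo. The names `hits_in_circle` and `estimate_pi` are hypothetical. Each task seeds its own `random.Random`, so both paths return the same estimate.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def hits_in_circle(n_samples, seed):
    """Count random points in the unit square that land inside the quarter circle."""
    rng = random.Random(seed)  # independent, reproducible generator per task
    hits = 0
    for _ in range(n_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def estimate_pi(n_samples, n_tasks=8, parallel=False):
    per_task = n_samples // n_tasks
    seeds = range(n_tasks)
    if parallel:
        # The only change: hand the same per-task function to a pool of workers
        with ThreadPoolExecutor() as pool:
            counts = list(pool.map(hits_in_circle, [per_task] * n_tasks, seeds))
    else:
        counts = [hits_in_circle(per_task, s) for s in seeds]
    return 4.0 * sum(counts) / (per_task * n_tasks)
```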

The words in picture form

Things get interesting when you take the same code that was built for the Phi and run it on the Xeons. The run time drops from 693 seconds to a hair under 14. Please note that this is not a recompile; it is the exact same binary that ran on the accelerator, and it speeds the code up about 50 times. This is why we said no sane HPC code is single threaded, and it goes to show how accurate the numbers commonly found in GPGPU marketing materials are. 100x speedups are not possible in the reality we both occupy.
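The arithmetic behind these numbers uses only the run times quoted in the text. Treating "a hair under 14" as 14 seconds slightly understates the Xeon-only speedup and slightly overstates the Phi-versus-Xeon ratio. Single-threaded Xeon against threaded Phi gives the marketing-style figure of about 109x, threading alone on the Xeons gives about 50x, and the like-for-like threaded Phi versus threaded Xeon comparison is only about 2.2x.

```python
# Run times quoted in the text, in seconds ("a hair under 14" rounded up to 14)
serial_xeon = 693.0
threaded_phi = 6.35
threaded_xeon = 14.0


def speedup(before, after):
    return before / after


marketing = speedup(serial_xeon, threaded_phi)        # serial Xeon vs threaded Phi
threading_only = speedup(serial_xeon, threaded_xeon)  # same threaded code, Xeons only
like_for_like = speedup(threaded_xeon, threaded_phi)  # threaded vs threaded

print(f"{marketing:.0f}x {threading_only:.1f}x {like_for_like:.1f}x")  # prints: 109x 49.5x 2.2x
```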

That brings us to the old parallel compute roadblock, Amdahl’s law. It essentially says that the speedup you get from parallelization is capped by the portions of the code that must run serially. Even with infinite parallel power, your code will only run as fast as its serial portions allow.
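In symbols, if a fraction p of the run time parallelizes perfectly across N workers, Amdahl's law gives a speedup of 1 / ((1 - p) + p / N), which can never exceed 1 / (1 - p). A minimal Python sketch follows; the 95% parallel fraction is purely illustrative, and 240 is simply the 60 cores times 4 threads from the card description above.

```python
def amdahl_speedup(p, n):
    """Fixed-size speedup when a fraction p of the work runs on n workers."""
    return 1.0 / ((1.0 - p) + p / n)


def amdahl_limit(p):
    """Ceiling as n grows without bound; requires p < 1."""
    return 1.0 / (1.0 - p)


# Illustrative only: 95% parallel code on 240 hardware threads
print(round(amdahl_speedup(0.95, 240), 1), round(amdahl_limit(0.95), 1))  # prints: 18.5 20.0
```

Even with 240 threads, the 5% serial share holds this hypothetical code below a 20x speedup.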

Adding to this is Gustafson’s observation that to work best within Amdahl’s law, you should maximize the amount of work done by the parallel portions. Essentially, this means you scale up the parallel work done for each iteration of the serial part; think of it as widening the vectors on a very large scale. Of course, practical limits put bounds on what can be done, as do the code, the data sets, and most importantly, the algorithms themselves. Some are amenable to Amdahl and Gustafson and others are not, so your mileage may vary. No, it will vary, and just like with ARM servers, your hardware should fit your code type, not the other way around.
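Gustafson's version of the arithmetic assumes the problem grows with the machine: if a fraction p of the scaled run is parallel, the scaled speedup is (1 - p) + p * N. A minimal sketch with the same illustrative numbers (95% parallel, 240 threads) follows. Here p is measured on the large run, so it is not directly interchangeable with Amdahl's p. For comparison, Amdahl's fixed-size ceiling at p = 0.95 is 20x.

```python
def gustafson_speedup(p, n):
    """Scaled speedup: the serial share stays fixed while parallel work grows with n.

    p is the fraction of wall time spent in parallel code on the n-worker run.
    """
    return (1.0 - p) + p * n


# Illustrative only: 95% parallel on 240 hardware threads
print(round(gustafson_speedup(0.95, 240), 2))  # prints: 228.05
```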

To ease this burden, Phi can be used in some rather unique ways. First off, you can ignore it and run the code on your host CPU, something that works best for single-threaded applications. The converse also works: you can run your code solely on the accelerator, at which point the CPU is a glorified I/O controller. Both of these methods work with GPUs too, but MIC keeps going from there. You can use it as an offload device, so the host CPU crunches the serial parts and farms out the parallel work to the card, or you can do it the other way around. Yes, you can use the Phi as the host device and relegate the Xeons to the accelerator role. Since GPUs don’t run the same code as a CPU, these last two modes are quite tricky to implement on them, but they are the most valuable to the user.
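The offload mode can be sketched in Python as a host function that keeps the serial split and gather steps and hands the data-parallel kernel to a separate executor standing in for the card. This is an analogy only: `run_offload` and `square_kernel` are hypothetical names, and a thread pool is not a Phi; Intel's own tools expressed offload through compiler directives rather than a pool object. Because the kernel is ordinary code, nothing in it cares which side plays host, which is the property that makes the reverse arrangement practical when host and card share an instruction set.

```python
from concurrent.futures import Executor, ThreadPoolExecutor


def square_kernel(chunk):
    """Data-parallel work that would be farmed out to the accelerator."""
    return [x * x for x in chunk]


def run_offload(data, n_chunks, device: Executor):
    """Host does the serial split and gather; `device` runs the parallel kernel."""
    if not data:
        return []
    size = -(-len(data) // n_chunks)  # ceiling division so every item is covered
    chunks = [data[i:i + size] for i in range(0, len(data), size)]  # serial: split
    partials = device.map(square_kernel, chunks)                     # parallel: offload
    return [y for part in partials for y in part]                    # serial: gather in order


with ThreadPoolExecutor(max_workers=4) as device:
    print(run_offload(list(range(8)), 3, device))  # prints: [0, 1, 4, 9, 16, 25, 36, 49]
```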

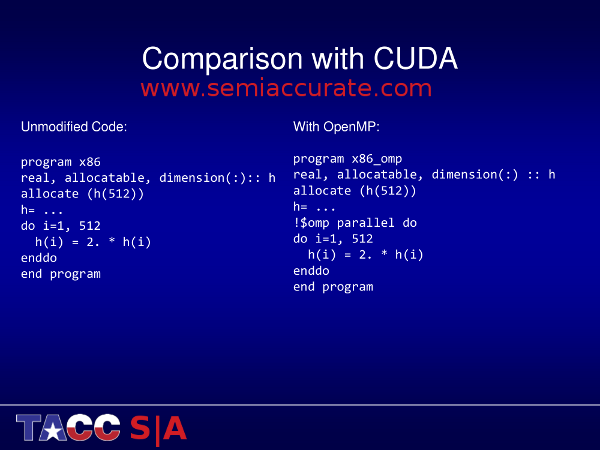

The most important part is how easy it is to code for, since programming effort will probably be the overriding cost for any HPC project that MIC cards, GPGPUs, or any other type of accelerator are a good fit for. Take a look at the two slides below from TACC, not from Intel, comparing base single-threaded code to the MIC port and the Nvidia CUDA port.

Normal code vs MIC code

In case it is not obvious, porting this code snippet to MIC takes a single line of code. No HPC code will be single threaded, so this ‘grueling task’ is already done for most existing code bases dating from the last decade or two. Now take a look at the single-threaded to CUDA example.

Normal code vs CUDA code

You may notice that it is not a single line of code to add, and that is a bit of a problem. Even if your code is already threaded, going from C/C++ or FORTRAN to CUDA is a lot of work. If you look closely, you can see that the CUDA code is not even in the same language as the original; it is a new one that works solely on Nvidia cards. To make matters worse, it is not just new semantics, it is a completely new paradigm.

The end result is that existing code probably works on a MIC card out of the box. If it doesn’t, it is an easy port with familiar tools. To go to CUDA, you are going to be doing a complete rewrite of your code in a new language, which calls for a very specialized and rare skill set. That means a long job and very expensive labor to slog through it. Worse yet, once it is done, your code is locked into Nvidia hardware and has to be completely rewritten to run on a normal CPU.

This may sound like Intel marketing hype, but it is not. For more than a year, SemiAccurate has been getting feedback from companies using Knights Corner and its predecessor cards, and they all report that it really does work that easily in the field. During the briefing for this card, Intel let us try it for ourselves. The short story is that it worked as they said it would.

Andreas Stiller rocking the MIC at TACC

With access to the Stampede system at TACC, some of the journalists were able to write code, test it on the Xeon E5s, and then port it to Phi cards. The results were as advertised: the whole process took about two hours, learning curve included. Your mileage may vary, but Phi is going to be vastly easier to code for than any of the equivalents out there. The tools are familiar, the code is familiar, and the cost is less than any of the competition's. What more do you want, for Intel to hold your hand? S|A

Charlie Demerjian
