The front end is also a big problem. Bulldozer’s front end can decode four instructions per clock vs three in Stars, and Intel’s Sandy Bridge can also do four per clock. This would be an advancement if that front end serviced one integer core, but it doesn’t; it services two. The front end can switch on a per-clock basis, feeding one core or the other, but not both in the same clock.

This effectively cuts the decode rate from 3/clock in Stars to an average of 2/clock/core in Bulldozer. Given buffers, stalls, and memory wait times, this may not be a bottleneck in practice, but it sure seems questionable on paper. One thing is certain: it is a regression from Stars, and it doesn’t live up to the promises made by insiders over the past few years.
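
To put numbers on that, here is a minimal sketch of a shared four-wide decoder that alternates between two cores every clock. It assumes both cores always have work waiting and that the switching is a strict round-robin; `shared_decode` and `DECODE_WIDTH` are hypothetical names for illustration, not anything from AMD.

```python
# Sketch: a 4-wide decoder shared by two integer cores, switching per clock.
# Assumption: both cores always have instructions waiting, and the decoder
# alternates strictly between them (simple round-robin).

DECODE_WIDTH = 4  # instructions decoded per clock (Bulldozer front end)


def shared_decode(clocks: int, cores: int = 2, width: int = DECODE_WIDTH) -> list[float]:
    """Return the average instructions decoded per clock for each core."""
    decoded = [0] * cores
    for clock in range(clocks):
        active = clock % cores    # only one core is fed in a given clock
        decoded[active] += width  # that core gets the full decode width
    return [d / clocks for d in decoded]


if __name__ == "__main__":
    per_core = shared_decode(clocks=1_000)
    print("Bulldozer, per core:", per_core)  # each core averages 2 per clock
    print("Stars, per core: 3.0")             # dedicated 3-wide decoder
```

Each core gets the full four instructions, but only every other clock, which is where the 2/clock/core average comes from.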

The integer cores have two ports per core vs three on the older architectures, so in theory this rate of four instructions every two clocks isn’t a big problem. However, since IPC does indeed go down vs Stars in the real world, it doesn’t seem to work out well in practice. That said, keeping a two-pipe core close to a three-pipe core in single-threaded integer performance is a pretty amazing feat, but the competition has more decode, more threads, and better overall performance. Teasing out the actual effect on performance is almost impossible, but there unquestionably is one. Slice a few more cuts here.
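
The on-paper argument is that decode and execution are balanced. A simplified way to see it is to treat the narrower of the two as a ceiling on per-core throughput; this `min()` bound is an assumption made here for illustration, and it ignores the buffers, stalls, and caches that decide real IPC.

```python
# Sketch: per-core throughput can't exceed the narrower of what the front end
# delivers and what the execution ports can issue. This is a simplified
# upper bound only; real IPC also depends on stalls, buffers and caches.


def peak_ipc(decode_per_clock: float, issue_ports: int) -> float:
    """Upper bound on sustained instructions per clock for one core."""
    return min(decode_per_clock, issue_ports)


# Stars: dedicated 3-wide decode feeding three pipes.
stars = peak_ipc(decode_per_clock=3, issue_ports=3)
# Bulldozer: 4 decoded every other clock (2/clock average) feeding two pipes.
bulldozer = peak_ipc(decode_per_clock=4 / 2, issue_ports=2)

print(f"Stars peak: {stars}, Bulldozer peak per core: {bulldozer}")
```

Under this bound the two halves of a Bulldozer core match each other, but both sit a full instruction per clock below Stars.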

Some of this can be alleviated with buffers, op fusion, and caches. Luckily Bulldozer has all of these, so problem solved, right? Nope, it is still a problem, a big, huge problem, even in spite of the fixes. Why don’t the fixes do the job and, well, fix things? Let’s go back to the history lesson. When Bulldozer was laid out and simulations were started, what workload did they use? What was the predicted workload that the Bulldozers intended for the flying cars of 2011 were going to run? What is actually being run now?

When any company makes a new architecture, they peer into the crystal ball and come up with possible workloads for many different scenarios. An architecture is then designed, those workloads are run on simulated chips, and the results are fed back into the design. Data paths are widened, buffers enlarged, caches made more granular, and thousands of other changes are made. Or the opposite: some things are simply not used as much as predicted and can be made smaller or more efficient. It is a somewhat iterative process.

After a while, choices are made and designs are frozen. Since simulators are hard pressed to run a full core, much less a full core at MHz speeds, sub-sections of the chip are run, or units are simplified for speed. In any case, the number of CPU cycles that can be simulated for a complete core, much less a complete chip, is shockingly small. In 2005 and 2006, that number was far smaller.

Given the complexity of Bulldozer, specifically the sharing of resources between integer cores, this simulation is of paramount importance. Contention for resources, sizes of buffers, and other small problems can have a huge effect on performance. A single cache miss, or a stall that causes a pipeline flush, can waste a few hundred cycles.
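
The textbook way to see why a single miss matters is to add the average stall cycles to the base cycles per instruction (CPI). The sketch below uses made-up numbers (a base CPI of 0.5 and one 300-cycle miss per 1,000 instructions); it is a generic model, not a Bulldozer measurement.

```python
# Sketch of the textbook stall model: every miss adds its penalty to the
# average cycles per instruction (CPI). All numbers below are hypothetical.


def effective_ipc(base_cpi: float, misses_per_instr: float, penalty_cycles: float) -> float:
    """IPC after adding average stall cycles to the base CPI."""
    cpi = base_cpi + misses_per_instr * penalty_cycles
    return 1.0 / cpi


ideal = effective_ipc(base_cpi=0.5, misses_per_instr=0.0, penalty_cycles=300)
# One 300-cycle miss every 1,000 instructions adds 0.3 cycles per instruction.
real = effective_ipc(base_cpi=0.5, misses_per_instr=1 / 1000, penalty_cycles=300)
print(f"IPC without misses: {ideal:.2f}, with misses: {real:.2f}")
```

Even at one miss per thousand instructions, IPC falls from 2.0 to 1.25 in this toy case, which is why buffer sizes and contention need to be tuned so carefully.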

Tuning an architecture at this level is essential, and having a very good description of possible workloads is critical as well. The more you can simulate, and the earlier you can do it, the better off you are. For me, this is the area where Bulldozer fell down the hardest: not one area, but hundreds of small things that each take a small toll on performance. Missing your target IPC by 0.25% is not a big problem; missing it by 0.25% in 43 places is.
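
Those small shortfalls compound. Assuming each of the 43 losses independently multiplies the others, a quick calculation with the figures above shows the scale; `compounded_loss` is a hypothetical helper for illustration.

```python
# Sketch: how small, independent IPC shortfalls compound. Uses the 0.25% and
# 43 figures from the text; assumes each loss multiplies the others.


def compounded_loss(per_item_loss: float, count: int) -> float:
    """Fraction of target performance lost when `count` losses stack."""
    remaining = (1.0 - per_item_loss) ** count
    return 1.0 - remaining


loss = compounded_loss(per_item_loss=0.0025, count=43)
print(f"One miss: 0.25%, 43 misses: {loss:.1%}")
```

That works out to roughly a 10% shortfall against target, close to the simple sum of 43 × 0.25% = 10.75%.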

Bulldozer seems to suffer from a lot of little tuning problems that are not easily correctable. There is a purported B3/C0 stepping in the works for Q1/12 that supposedly increases integer IPC by an appreciable amount, but we will wait and see what the end result is. Most of the ‘little’ problems are not really correctable without a major core revision, and that is not happening until Piledriver.

Bulldozer is hobbled by many different problems, and the lack of tuning is probably the major reason why. Given the resources needed to simulate performance, it is somewhat understandable why more wasn’t done, but it probably would have been well worth it to delay the chip further and get things right. Each little weight thrown onto the architecture makes it that much harder to float, much less fly.

Saying this in hindsight is easy; those decisions would have had to be made years ago, and years before there was silicon available to test. If the performance models and simulation runs showed good performance at the time, then it was full steam ahead. Given the canning of the 45nm Bulldozers, it seems like problems were spotted years ago and corrective action, including delays, was taken.

Unfortunately, those changes were not enough, and AMD is currently paying the price in performance. The difference between improving IPC a bit and losing double-digit percentages is a very fine line, and the devil is in the details. Once a company has silicon in hand, simulation speeds and testing cycles increase by many orders of magnitude. What was a struggle to run at 10MHz now runs in the GHz range, and billions of cycles can be run in an hour, not thousands. By then, however, it is impossible to make the needed changes. These little details comprise a few hundred cuts, some small, some large.

Where are things going for our intrepid hero?

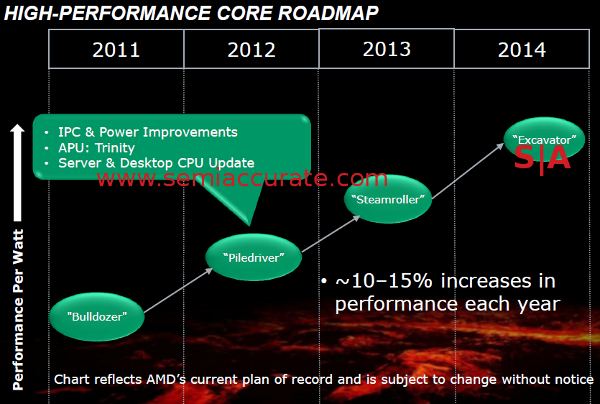

That brings us to the one ray of hope in the midst of all this blah: future architectures. As you can see, AMD has Bulldozer out, Piledriver is coming in Trinity early next year, followed by Steamroller and Excavator. The exponential learning curve that AMD broke its nose running into with Bulldozer will very likely lead to a much better Piledriver. Insiders are telling SemiAccurate that this is indeed the case; Piledriver is said to fix many of the little ills that sank Bulldozer.

AMD showed off functional Piledriver based Trinity silicon in June, and running notebooks two weeks later. If Trinity is nothing more than an industrial size box of band-aids for Bulldozer, it is a good thing. The concept of Bulldozer doesn’t seem to have any major problems, but the execution details do. With luck, these will be fixed in a couple of quarters.

There is one other problem that is again hard to pin down to a single source: clock speed. Bulldozer clocks to very high numbers, but it was meant to hit speeds at least 10% higher at launch. Since IPC × clock speed = performance, higher clocks would have meant a lot more raw performance. Bulldozer does clock very high, to world-record speeds in fact, and the hardcore overclockers all say that very high raw clocks are easy to coax out of the chip.
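
As a rough illustration of that rule of thumb, holding IPC constant means a 10% clock shortfall costs 10% of performance. The 3.6GHz base clock and IPC of 1.0 below are placeholders chosen for illustration; only the 10% ratio comes from the text, and `performance` is a hypothetical helper.

```python
# Sketch of the rule of thumb: performance = IPC * clock speed.
# Clock and IPC values are hypothetical; only the 10% ratio comes from the text.


def performance(ipc: float, clock_ghz: float) -> float:
    """Relative performance in billions of instructions per second."""
    return ipc * clock_ghz


base_clock = 3.6                  # hypothetical shipping clock, GHz
target_clock = base_clock * 1.10  # "at least 10% higher" than shipped
ipc = 1.0                         # hold IPC fixed to isolate clock speed

gain = performance(ipc, target_clock) / performance(ipc, base_clock) - 1
print(f"Extra performance from the missing clocks: {gain:.0%}")
```

Because the relationship is a straight product, every point of clock speed left on the table is a point of performance lost, on top of any IPC shortfall.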

The easy thing to do is blame GlobalFoundries for underdelivering on their 32nm process, but we don’t think that is accurate this time. Interestingly, AMD insiders are quick to point the finger at GloFo over Llano yields and problems, but none seem to be doing the same for Bulldozer. Yields are said to be decent, and idle power is lower than on 45nm Phenoms, so the process looks to be OK. Power at load seems to be the more limiting factor, and that is likely a design issue, not a process one.

What will fix this? Once again, there is unlikely to be a single answer; it is just a lot of little things that burn a few thousandths of a watt here and there, all adding up. Fixing these little inefficiencies is not quick, easy, or likely doable in a single metal layer revision. A concerted effort to put out many little campfires, rather than a single forest fire, is not done as a minor revision; you really need a new core.

Llano shows that AMD is more than capable of putting that effort in: it took the aging Stars core and brought it up to modern standards as the Husky core. Even if a bolt-on upgrade like the one Llano/Husky got cannot work miracles, it shows that you can go a long way with a concerted effort to buff and wax a chip. Once again, that points to good things for Piledriver.

The end result is clear: you can buy a Bulldozer now and test the results, or lack thereof. It isn’t a winner, period, but it isn’t a dog either. If you have a relatively new CPU, there isn’t much point in upgrading to a Bulldozer-cored product. AMD clearly realizes this and priced the new chip almost exactly where it fits on the price/performance curve, with a little ‘newness tax’ added on.

AMD used phrases like ‘forward looking architecture’ and other buzzwords when talking about the new chip to the press, something that is never a good sign. We agree with the idea, but not for the same reasons. To us, Bulldozer looks like a very raw attempt to change how things are done, to once again step out of the box. Even with the extremely long gestation period, there clearly wasn’t enough time, but there is reason to believe the foundation is solid.

With luck, those rough spots will be polished away with the coming of Piledriver, and the intended performance will shine through. If not, the next three generations are toast too, and we doubt AMD can survive that long without a competitive core. Will AMD bleed out from the 1000 cuts, or will the band-aids be applied in time? Ask me again after CES.

S|A

Charlie Demerjian