Intel is finally launching the Romley platform today, its two-socket server line based on Sandy Bridge-EP. Calling it the best server CPU out there only scratches the surface; it approaches the level of a server System on a Chip (SoC).

On the face of it, there isn’t much to talk about with this chip. Sandy Bridge-E, the single-socket version, came out last year, and this is the same silicon. Why did we say that chip left us feeling unexcited, while the same thing in two-socket form is the best thing since sliced and polished 300mm ingots? How can two such radically different opinions form from the same freaking part? Easy: the intended purposes of the Core iSomethingMeaningless7 3960X and the new Xeon E5-2600 line are diametrically opposed.

Sandy-E, as the single-socket desktop version is known, is fast; in fact, it is the single fastest desktop CPU out there by any sane benchmark. The only chips that come close are its four-core Sandy Bridge (no -E) siblings. Those chips have the same core but a radically different uncore, including an underwhelming GPU and half the memory bandwidth. The big brother loses the anemic GPU, gains clocks, and adds to an already adequate memory subsystem. Sounds good so far.

The problem is that the high-end 6-core 3960X, a $1000 chip, runs at 3.3GHz with a max turbo clock of 3.9GHz. The 4-core iMumblemumble-2700K, a $340 chip, runs at 3.5GHz with the same 3.9GHz max turbo clock. In apps with a low thread count, which is what almost every desktop user runs, the cheaper CPU tops out at the same point but generally starts from a higher one. In recent years, the only class of desktop application that can use as much horsepower as you can give it is games, and games don’t thread well at all; in fact, most struggle to justify a third core.

Both chips sport Hyper-Threading (HT), so the 2700K offers 8 threads and the 3960X 12 in a world that really has no use for even 4 cores without HT. This isn’t to say the 3960X isn’t fast; quite the opposite, in fact. Unfortunately, it barely raised the bar for real-world use; it underwhelmed. Instead of being a fire-breathing step forward, it left you asking whether it was worth three times the price of the 2700K, plus the added margins on its boards. Hard to justify, and that left us cold.
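To put the "three times the price" point in numbers, here is a quick back-of-the-envelope calculation using only the list prices, core counts, and clocks quoted above. The dollars-per-core and clock-ratio metrics are our own illustrative choices, not an official value measure.

```python
# Figures quoted in the text; the derived metrics are illustrative only.
chips = {
    "3960X": {"price_usd": 1000, "cores": 6, "base_ghz": 3.3, "turbo_ghz": 3.9},
    "2700K": {"price_usd": 340, "cores": 4, "base_ghz": 3.5, "turbo_ghz": 3.9},
}

high_end, mainstream = chips["3960X"], chips["2700K"]

# How many 2700Ks you could buy for the price of one 3960X.
price_ratio = high_end["price_usd"] / mainstream["price_usd"]
# The 2700K's base-clock advantage over the 3960X.
base_clock_ratio = mainstream["base_ghz"] / high_end["base_ghz"]
# Both chips share the same max turbo clock, so this is 1.0.
turbo_clock_ratio = mainstream["turbo_ghz"] / high_end["turbo_ghz"]

for name, c in chips.items():
    print(f"{name}: ${c['price_usd'] / c['cores']:.2f} per core")
print(f"price ratio: {price_ratio:.2f}x")
print(f"2700K base clock advantage: {(base_clock_ratio - 1) * 100:.1f}%")
print(f"turbo clock ratio: {turbo_clock_ratio:.2f}")
```

Since both chips hit the same 3.9GHz turbo on lightly threaded loads, the roughly 2.9x price premium buys extra cores and memory bandwidth rather than per-thread speed.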

When you step into the server world, however, the E5-2600 is just the ticket. The chip ups the core count to 8 per socket, 16 threads with HT, and most server workloads can use all the horsepower you can throw at them. Servers are not a monolithic world, but for the most part, you can safely say that servers not doing High Performance Computing (HPC) will generally have either multiple users or multiple tasks. Both of these workloads, and even some HPC workloads, are very amenable to lots of cores and threads. Even with just a few hundred users, if you have enough horsepower and threads to dedicate to the task, their experience is significantly better: requests are serviced faster, responses are snappier, and things don’t bog down as much under load. In this world, performance is still king, but single-threaded performance is not the most important factor; throughput is.

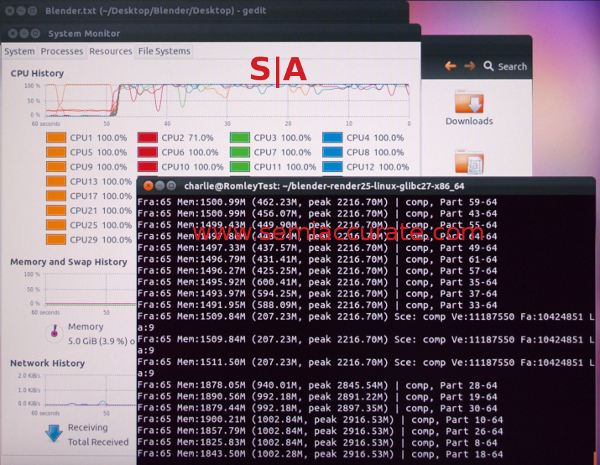

32 threads spinning merrily away

Here, Sandy-EP shines, and the E5-2600 is a killer CPU. The new features that were either glossed over or simply not talked about when the iWhy-3960X came out are downright amazing in the server world. Four memory channels are able to maintain high throughput without stalling threads, and the large caches will be put to good use with hundreds of users.

Of all the added Sandy Bridge features, moving PCIe on die is the least understood but by far the most important. It enables a feature called Data Direct I/O (DDIO), which most people think of as simply lowering I/O latency. That is true, but on the desktop, where it first appeared, the effect on end users is minimal. On servers, it is perhaps the biggest step forward since the integrated memory controller, and its effects may become far more profound once Intel unlocks them. DDIO touches far more than it appears to, and more deeply than most realize.

The last big bang is power management, in particular a feature Intel calls Energy Performance Bias (EPB). EPB is not just the frequency and voltage scaling that all modern CPUs have; it is extremely sophisticated and allows multiple profiles for a CPU: power savings, performance, and 14 other settings between those two extremes. You can tune it for your workload in ways that were not possible in the past.
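As a rough illustration of how those 16 settings can be represented, the sketch below decodes a 4-bit EPB hint. It assumes Intel's documented IA32_ENERGY_PERF_BIAS MSR (address 0x1B0), where bits 3:0 hold the hint, 0 requests the highest performance and 15 the maximum energy savings. The labels for the 14 intermediate values are hypothetical and only show that lower numbers lean toward performance; actually reading the MSR needs OS support and privileges and is not shown.

```python
# Sketch only: decodes the 4-bit Energy Performance Bias (EPB) hint.
# Assumes the IA32_ENERGY_PERF_BIAS MSR layout (address 0x1B0), bits 3:0:
# 0 = highest performance, 15 = maximum energy savings.
# The intermediate labels are hypothetical, chosen for illustration.

IA32_ENERGY_PERF_BIAS = 0x1B0  # MSR address (reading it is not shown here)
EPB_MASK = 0xF                 # bits 3:0 hold the hint


def epb_hint(raw_msr_value: int) -> int:
    """Extract the 4-bit EPB hint from a raw 64-bit MSR value."""
    return raw_msr_value & EPB_MASK


def describe_epb(hint: int) -> str:
    """Map a hint in 0..15 to a coarse, illustrative policy label."""
    if not 0 <= hint <= 15:
        raise ValueError(f"EPB hint must be in 0..15, got {hint}")
    if hint == 0:
        return "performance"
    if hint == 15:
        return "power savings"
    # The 14 settings in between: lower values lean toward performance.
    return "leans performance" if hint < 8 else "leans power savings"


if __name__ == "__main__":
    for h in range(16):
        print(h, describe_epb(h))
```

The split at 8 is arbitrary; the point is that EPB is a graded preference the hardware consults, not a binary on/off switch.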

Adding to this is another group of technologies Intel calls Running Average Power Limit (RAPL). RAPL takes the concept of CPU power capping that came out in the previous Nehalem and Westmere CPU lines, makes it react far more quickly, and extends it to the entire server as an internal closed loop. You can now set the entire machine to a wattage cap and it will stay there, holding that cap better than any platform in the past. Unlike the power capping on previous CPUs, RAPL takes things like memory, PCIe cards, and power supplies into account, and can control them too. RAPL is a huge advance for data centers.
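To make the "internal closed loop" idea concrete, here is a toy proportional controller that keeps a simulated server at or below a wattage cap. This is not how RAPL is implemented; the `ToyServer` linear power model, its wattages, and the gain are all hypothetical, and real hardware measures or models power across many components rather than one throttle knob.

```python
from dataclasses import dataclass


@dataclass
class ToyServer:
    """Hypothetical linear power model: idle draw plus a throttleable part."""
    idle_watts: float
    dynamic_watts: float  # extra draw at full speed (level = 1.0)

    def power(self, level: float) -> float:
        return self.idle_watts + level * self.dynamic_watts


def run_power_cap(server: ToyServer, cap_watts: float,
                  steps: int = 50, gain: float = 0.5) -> tuple[float, list[float]]:
    """Proportional feedback loop: measure, compare to the cap, adjust, repeat.

    Returns the final performance level (0.0 to 1.0) and the power
    measured at each step.
    """
    level = 1.0  # start at full performance
    readings = []
    for _ in range(steps):
        watts = server.power(level)          # "measure" power
        readings.append(watts)
        error = cap_watts - watts            # negative when over the cap
        # Convert the watt error into a level change and apply a fraction of it.
        level += gain * error / server.dynamic_watts
        level = min(1.0, max(0.0, level))    # clamp to the valid range
    return level, readings


if __name__ == "__main__":
    box = ToyServer(idle_watts=100.0, dynamic_watts=200.0)
    level, readings = run_power_cap(box, cap_watts=250.0)
    print(f"settled at level {level:.3f}, drawing {box.power(level):.1f} W")
```

The gain sets how quickly the loop reacts. In this toy model, gains up to 1 approach the cap from one side, while gains between 1 and 2 overshoot before settling, which hints at why faster reaction is harder to get right than it sounds.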

These technologies sound great for server customers, and they are. We will soon take an in-depth look at the architecture, these technologies, and a few more surprises. The Sandy Bridge-EP CPU, the E5-2600 line, and the Romley platform will all be covered, and there is a lot of new technology there. It is undoubtedly the best server platform we have ever tested, period.

To give you an idea of how good it is, SemiAccurate spent the last few weeks testing the Intel R2000 (Bighorn Peak) 2U platform based on the S2600GZ (Grizzly Pass) 2S Romley board, and it quickly became obvious that we could not stress it with any real workload; only artificial workloads would make this beast sweat. I could not find a way to stress both the memory subsystem and the CPUs at once. To make matters worse, none of this touched the most important modern bottlenecks, the network and I/O. Tests didn’t stress the platform evenly; what used to be system tests became subsystem tests, and were obviously the compute equivalent of makework.

What do you do when a single box is able to cope with anything you can throw at it, and the results are not really relevant to the workloads buyers will use it for? How do you show what it can do? You put your money where your mouth is and put your most critical real-world workload on it to see how it copes. We did just that, and once some non-Romley-related problems* were fixed, it runs like a charm.

This is SemiAccurate, and you are using it

The Romley box has an order of magnitude more CPU power, more than an order of magnitude more RAM, and everything else is vastly faster, lower latency, and more reliable than on the 2U 2S 4C server it replaced. Initial numbers also appear to show that it uses less energy to do so, runs with less noise, and has cut request response time on untuned, unported, simply migrated VMs by 25%. What is this workload we used to test? www.SemiAccurate.com. You are on the Romley box right now, and are doing testing as you read. S|A

*OK, I’ll come clean: it turned out to be a just-flaky-enough-to-pass-local-tests network cable. Aargh!

Charlie Demerjian
